Building a website monitoring system with Laravel (Part 1)

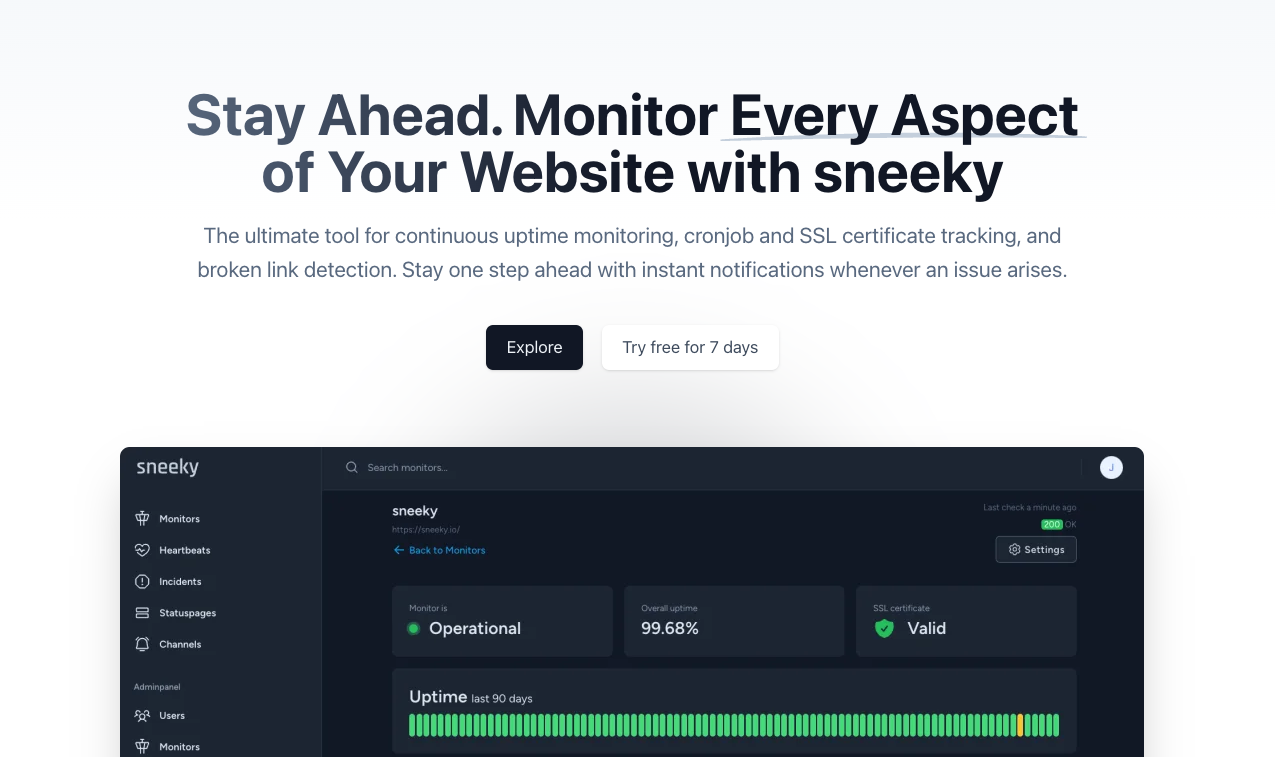

Join me on my journey of building a scalable website monitoring system with Laravel: sneeky.io

This is meant to be the first in a series of articles describing how I build and scale a feature-rich website monitoring tool. Let’s see how far I get.

When I started working as a full-stack web developer at a new company, I learned a lot about maintaining a large number of customer websites and apps. We don’t only have a lot of servers to monitor, but also websites. A lot of them. And every site has its own special requirements.

One site needs to stay within a specific response-time range, another needs to be monitored from a different location, and a third needs regular broken-link checks because the customer places links by hand. And wouldn’t it be nice if every site reported its own Lighthouse scores every day?

While there are a ton of website monitoring services out there, none of them combined all the features we needed at an acceptable price. I also find it extremely interesting to build a scalable system that can handle, let’s say, hundreds of thousands of checks every day. So I built one: sneeky.

Designing and planning the application

For me, designing the application more or less means evaluating all the desired features and working out how to implement them in a reliable system in which every service and function works seamlessly with the others.

Features I wanted to implement

Basic uptime checks from different locations

I want to check the status code of a website from various locations, as some sites may only be available in certain countries. The system should also be easy to extend, so I can add new servers in new regions automatically or in no time. These checks should run at configurable intervals.
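To make the per-location, per-interval idea concrete, here is a minimal scheduling sketch. It is written in Python only so it runs standalone (sneeky itself is a Laravel app), and the `Monitor` fields, the location names and `due_checks` are hypothetical, not sneeky’s real schema. On every scheduler tick, each (monitor, location) pair whose interval has elapsed becomes one check job for a worker in that location.

```python
from dataclasses import dataclass, field


@dataclass
class Monitor:
    # Hypothetical monitor record: the URL, its check interval and where to check it from.
    url: str
    interval_seconds: int
    locations: list[str]
    # Unix timestamp of the last check per location; a missing key means "never checked".
    last_checked: dict[str, float] = field(default_factory=dict)


def due_checks(monitors: list[Monitor], now: float) -> list[tuple[str, str]]:
    """Return (url, location) pairs whose interval has elapsed at time `now`."""
    due = []
    for monitor in monitors:
        for location in monitor.locations:
            last = monitor.last_checked.get(location)
            if last is None or now - last >= monitor.interval_seconds:
                due.append((monitor.url, location))
    return due


monitors = [
    Monitor("https://a.example", 30, ["eu", "us"], {"eu": 100.0, "us": 80.0}),
    Monitor("https://b.example", 300, ["eu"], {"eu": 0.0}),
]
print(due_checks(monitors, now=120.0))  # [('https://a.example', 'us')]
```

With this shape, a new region is just another location string plus a worker that serves it.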

SSL certificate checks

The next important part of web monitoring is checking SSL certificates regularly. For that I need a service that checks the certificates at configurable intervals. The important information here is the expiry date and whether a certificate is valid or not.
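A certificate check like this can be sketched with Python’s standard `ssl` module (Python only so the example runs on its own; the real service would live in Laravel). `fetch_not_after`, `days_until_expiry`, `expiry_status` and the 14-day warning threshold are hypothetical names and values. The validity check falls out of the TLS handshake itself, and the expiry date comes from the certificate’s `notAfter` field.

```python
import socket
import ssl

DAY = 86400


def fetch_not_after(host: str, port: int = 443, timeout: float = 10.0) -> str:
    """Open a verified TLS connection and return the certificate's notAfter field.

    With the default context, an untrusted or expired certificate, or a hostname
    mismatch, makes the handshake raise ssl.SSLCertVerificationError. That covers
    the "valid or not" part of the check.
    """
    context = ssl.create_default_context()
    with socket.create_connection((host, port), timeout=timeout) as sock:
        with context.wrap_socket(sock, server_hostname=host) as tls:
            return tls.getpeercert()["notAfter"]


def days_until_expiry(not_after: str, now: float) -> float:
    """Days left until expiry; negative once the certificate has expired."""
    return (ssl.cert_time_to_seconds(not_after) - now) / DAY


def expiry_status(days_left: float, warn_days: int = 14) -> str:
    # Hypothetical threshold: start warning two weeks before expiry.
    if days_left < 0:
        return "expired"
    if days_left <= warn_days:
        return "expiring"
    return "ok"
```

A live check would then be something like `expiry_status(days_until_expiry(fetch_not_after("sneeky.io"), time.time()))`, wrapped in a handler for `ssl.SSLCertVerificationError`.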

Broken link checks

I want to check every website for broken links, such as links returning 404s and 500s, because it is important to keep the user experience as smooth as possible. This should also run at configurable intervals and be extensible to other types of links, such as mailto, tel, etc. So I need a crawler that visits each page and records the status code of every link.
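The core of such a crawler is extracting links from a page and deciding how each one gets checked. The following is a minimal Python sketch using only the standard library. `LinkCollector`, `link_kind` and `is_broken` are hypothetical names. Fetching pages and following same-site links to the next page are left out. The scheme dispatch in `link_kind` is where new link types such as mailto or tel would plug in.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class LinkCollector(HTMLParser):
    """Collect the href of every <a> tag, resolved against the page URL."""

    def __init__(self, page_url: str):
        super().__init__()
        self.page_url = page_url
        self.links: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        for name, value in attrs:
            if name == "href" and value:
                self.links.append(urljoin(self.page_url, value))


def link_kind(link: str) -> str:
    """Decide how a link gets checked; support for new schemes is added here."""
    scheme = urlparse(link).scheme
    if scheme in ("http", "https"):
        return "http"  # request it; a 4xx or 5xx status counts as broken
    if scheme in ("mailto", "tel"):
        return scheme  # no HTTP request possible, only a format check
    return "other"


def is_broken(status_code: int) -> bool:
    return status_code >= 400


collector = LinkCollector("https://example.com/blog/")
collector.feed('<a href="/contact">Contact</a> <a href="mailto:hi@example.com">Mail</a>'
               ' <a href="tel:+49-30-1234">Call</a>')
print([(link, link_kind(link)) for link in collector.links])
```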

Automated Lighthouse tests

Performance and accessibility are key for SEO, so they need to be monitored as well. After a deployment or changes made by customers, we have to make sure that performance and the other Lighthouse categories stay optimized. One run a day should be enough here.

Cronjob monitoring

In addition, it should be possible to monitor cronjobs, as some tasks in our systems rely on perfectly timed cronjobs. These cronjobs are part of the application and can cause damage if they don’t run.
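Cronjob monitoring is commonly done with a heartbeat: the job reports in after each run, and the monitor alerts when a report is overdue. Laravel’s scheduler can already send such a ping with `thenPing($url)`. The monitoring side is sketched below in Python so it runs standalone. `HeartbeatMonitor` and its fields, including the grace period, are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class HeartbeatMonitor:
    # Hypothetical model: the cronjob pings the monitor after every successful run.
    expected_every: int  # seconds between scheduled runs
    grace: int  # extra seconds tolerated before alerting (jobs rarely finish on the dot)
    created_at: float
    last_ping: Optional[float] = None

    def ping(self, now: float) -> None:
        self.last_ping = now

    def is_missed(self, now: float) -> bool:
        # Before the first ping, measure from the moment the monitor was created.
        reference = self.last_ping if self.last_ping is not None else self.created_at
        return now - reference > self.expected_every + self.grace


cron = HeartbeatMonitor(expected_every=60, grace=30, created_at=0.0)
print(cron.is_missed(now=80.0))   # False: still within 60 s + 30 s grace
print(cron.is_missed(now=100.0))  # True: no ping for 100 s
cron.ping(now=100.0)
print(cron.is_missed(now=150.0))  # False: last ping 50 s ago
```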

Instant notifications for events

Of course we need to know when something goes wrong. So we need a notification system that can send alerts to multiple channels at once, from email to Discord, Slack, etc.
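Laravel notifications already list their target channels in a `via()` method. The important property is that one broken channel must not swallow the alert for the others. Here is a minimal Python sketch of that fan-out. `Channel`, `RecordingChannel` and `notify_all` are hypothetical stand-ins; real channels would call a mail server or a webhook instead of recording messages.

```python
from typing import Protocol


class Channel(Protocol):
    name: str

    def send(self, message: str) -> None: ...


class RecordingChannel:
    """Stand-in for email, Discord or Slack: records messages instead of sending them."""

    def __init__(self, name: str, reachable: bool = True):
        self.name = name
        self.reachable = reachable
        self.sent: list[str] = []

    def send(self, message: str) -> None:
        if not self.reachable:
            raise ConnectionError(f"{self.name} is unreachable")
        self.sent.append(message)


def notify_all(channels: list[Channel], message: str) -> list[str]:
    """Send to every channel; a failing channel must not stop the others.

    Returns the names of the channels that failed, e.g. for a later retry.
    """
    failed = []
    for channel in channels:
        try:
            channel.send(message)
        except Exception:
            failed.append(channel.name)
    return failed
```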

Clear and shareable status pages

We also need to see all information about a specific website’s performance, errors, events and downtimes. Therefore I want to implement a clear status page for each monitor that shows all the necessary information on a single screen. For that we can also log the response times.
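Once every check logs its result and response time, the headline numbers of a status page are simple aggregates. Below is a minimal Python sketch with a hypothetical `CheckResult` row and `summarize` helper. It computes uptime as the share of successful checks, which is only an approximation whose accuracy depends on the check interval.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Optional


@dataclass
class CheckResult:
    # Hypothetical log row written by every uptime check.
    checked_at: float
    up: bool
    response_ms: Optional[float]  # None when the request failed


def summarize(results: list[CheckResult]) -> dict:
    """Uptime (share of successful checks) and average response time for a status page."""
    if not results:
        return {"uptime_percent": None, "avg_response_ms": None}
    successful = [r for r in results if r.up]
    times = [r.response_ms for r in successful if r.response_ms is not None]
    return {
        "uptime_percent": round(100.0 * len(successful) / len(results), 2),
        "avg_response_ms": round(mean(times), 1) if times else None,
    }
```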

Team management

As such a service is especially interesting for agencies and companies that maintain more than two websites, we need the ability to work in teams and add team members who can then use the system with different permissions. This is also important if you offer two-factor authentication.
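Team permissions usually boil down to a mapping from roles to allowed actions. In Laravel that check would typically sit in Gates or Policies. The Python sketch below only shows the data structure. The three roles and the permission names are hypothetical examples, not sneeky’s actual model.

```python
from enum import Enum


class Role(Enum):
    OWNER = "owner"
    ADMIN = "admin"
    VIEWER = "viewer"


# Hypothetical role -> permission mapping; the real roles may differ.
PERMISSIONS: dict[Role, frozenset[str]] = {
    Role.OWNER: frozenset({"view", "edit_monitors", "manage_team", "manage_billing"}),
    Role.ADMIN: frozenset({"view", "edit_monitors", "manage_team"}),
    Role.VIEWER: frozenset({"view"}),
}


def can(role: Role, permission: str) -> bool:
    return permission in PERMISSIONS[role]
```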

Easy and affordable payment solution

This service needs servers and development, and it has to be maintained, so it cannot be entirely free to use. That’s why it is important to have an easy and affordable payment solution, so that it is accessible to everyone and can be paid via an affordable subscription model.

Technical requirements

With all these features, it is safe to say that we need an extremely reliable and extensible system to run all these services. It should be able to run on different machines in different regions with dedicated IPs, so that my customers can whitelist my check servers if necessary.

Another criterion is that it needs to be as cheap as possible at the beginning. I am a regular employee without much financial backing. Therefore, cost-effective hosting and scalability options are essential to ensure the long-term success of the project.

So I came up with some technical requirements.

Dynamic queue system

We need a queue to handle the load of all the different services and jobs, some of which run every 30 seconds, paired with a flexible number of workers that get all the jobs done in priority order. When a website is added, it should be checked immediately for a good user experience. So these initial checks need a kind of priority queue, so they don’t wait behind all the standard jobs already in the queue.
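In Laravel, priority is typically expressed with separate named queues that a worker drains in order (`php artisan queue:work --queue=high,default`). The ordering rule itself can be shown with a single heap. This is a minimal Python sketch. `PriorityJobQueue`, the two priority levels and the job names are hypothetical.

```python
import heapq
import itertools

HIGH, NORMAL = 0, 1  # a lower number is served first


class PriorityJobQueue:
    def __init__(self):
        self._heap: list[tuple[int, int, str]] = []
        # Increasing counter as tie-breaker: jobs with equal priority stay in FIFO order.
        self._counter = itertools.count()

    def push(self, job: str, priority: int = NORMAL) -> None:
        heapq.heappush(self._heap, (priority, next(self._counter), job))

    def pop(self) -> str:
        return heapq.heappop(self._heap)[2]

    def __len__(self) -> int:
        return len(self._heap)


queue = PriorityJobQueue()
queue.push("uptime:site-a")
queue.push("uptime:site-b")
queue.push("uptime:new-site", priority=HIGH)  # a freshly added site jumps the line
print([queue.pop() for _ in range(len(queue))])  # ['uptime:new-site', 'uptime:site-a', 'uptime:site-b']
```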

Flexible worker structure

To process all these different jobs, we also need a bunch of workers. These workers should be easy to deploy and flexible in terms of which tasks they take from the queue. For example, the uptime check queue will be split by location and by priority. Each worker can then be configured to fetch jobs from very specific queues.
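Splitting queues by location and priority mostly comes down to a naming scheme and an ordered list of queues per worker. In the sketch below, an EU uptime worker only ever sees EU uptime jobs, high priority first. It is plain Python for illustration only. `QueueBroker` stands in for the central Redis queue, and the `<check>-<location>-<priority>` naming and `worker_queues` helper are hypothetical.

```python
from collections import deque
from typing import Optional


class QueueBroker:
    """In-memory stand-in for the central queue (Redis in a Horizon setup)."""

    def __init__(self):
        self.queues: dict[str, deque] = {}

    def push(self, queue: str, job: str) -> None:
        self.queues.setdefault(queue, deque()).append(job)

    def pop_first(self, queue_names: list[str]) -> Optional[tuple[str, str]]:
        """Take one job from the first non-empty queue, in the worker's configured order."""
        for name in queue_names:
            pending = self.queues.get(name)
            if pending:
                return name, pending.popleft()
        return None


def worker_queues(check: str, location: str) -> list[str]:
    # Hypothetical naming scheme "<check>-<location>-<priority>", high priority listed first.
    return [f"{check}-{location}-high", f"{check}-{location}-default"]


broker = QueueBroker()
broker.push("uptime-eu-default", "site-a")
broker.push("uptime-us-default", "site-b")
broker.push("uptime-eu-high", "new-site")

eu_worker = worker_queues("uptime", "eu")
print(broker.pop_first(eu_worker))  # ('uptime-eu-high', 'new-site')
print(broker.pop_first(eu_worker))  # ('uptime-eu-default', 'site-a')
print(broker.pop_first(eu_worker))  # None: the US job is left for a US worker
```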

Dedicated IP addresses for outgoing checks

This is definitely a big one, as some customers want to monitor sites behind a firewall. They need to know the exact, static IP addresses of the uptime, SSL, broken link and Lighthouse workers to be able to whitelist them.

The desired tech stack

All of these features and technical requirements can be implemented with Laravel. Not only is it possible, it is also a great choice, as Laravel ships with a queue system, notifications and a task scheduler. And because I’m used to Laravel, there is not much extra I have to learn, apart from topics like scaling queues and implementing specific features.

With all these features and a bunch of other requirements in mind, I first came up with three different approaches to building the web monitoring system from scratch.

1. Serverless approach with Laravel Vapor

I wanted the system to be as scalable as possible and built on modern, reliable technologies. I wanted to be able to throw millions of requests at the system and have it keep running as smoothly as possible.

While researching this approach, some concerns quickly came up that made me reconsider. There were too many downsides, especially when it comes to flexibility.

As I mentioned earlier, I need dedicated IPs for the outgoing checks because of my customers’ firewalls. In Vapor/AWS it is expensive to run functions in different regions behind dedicated IP addresses. That’s a huge downside.

I also need to be able to crawl customer websites. As queue job execution on AWS Lambda is limited to 15 minutes, I might need to run those long crawls on different machines anyway. These are only the main reasons why this approach didn’t make it; there may be more, such as database limitations. So I decided to build the system on a different stack.

2. Kubernetes in a self-hosted cluster

As the first option would not really fit my requirements, I came up with a second idea: building a Kubernetes cluster and splitting my application into multiple microservices running on individual nodes.

I’m very interested in k8s but had little experience with it at the time. My thoughts were always about scaling infinitely and being resilient against system failures. This might sound like overkill for an application that is just starting out, and it is.

Running a cluster where specific tasks run on specific nodes with dedicated IPs may be possible, but there’s a lot to learn along the way.

Also, there is no real need to split the application into microservices. That would lead to much higher maintenance effort because of the different repos and services I would need to maintain, especially when I can build every single feature with Laravel and don’t have to split services across different languages, systems or frameworks.

So I skipped this idea as well for the moment and will come back to it later if necessary.

3. Multiple worker server infrastructure

My third and final approach was to implement all features in a single Laravel app and use Laravel’s built-in services combined with a multi-server network for various kinds of workers.

So every task is stored in a centralized queue, and each worker fetches the jobs it is configured for. Every worker runs on its own server, which can be scaled vertically (more CPU and RAM), and every task can be scaled horizontally by automatically adding more worker servers based on load. This also means there are multiple dedicated IP addresses that never change. The only catch is that new ones will be added over time, but that can be communicated to customers.
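"Adding more worker servers automatically by load" needs a rule for how many servers a queue should have. One simple rule divides the backlog by what a single worker can process in a target time and clamps the result to a budget. Horizon’s `auto` balancing already shifts worker processes between queues on one server; this Python sketch only covers the coarser decision of how many servers to run. `workers_needed` and all the numbers are hypothetical. Each additional server also brings one more static IP that customers have to whitelist.

```python
import math


def workers_needed(pending_jobs: int, jobs_per_worker_per_min: float, target_minutes: float,
                   min_workers: int = 1, max_workers: int = 20) -> int:
    """Worker servers needed to drain the backlog within `target_minutes`, clamped to a budget."""
    if pending_jobs <= 0:
        return min_workers
    capacity_per_worker = jobs_per_worker_per_min * target_minutes
    needed = math.ceil(pending_jobs / capacity_per_worker)
    # The upper bound keeps the monthly server bill predictable.
    return max(min_workers, min(max_workers, needed))


print(workers_needed(450, jobs_per_worker_per_min=100, target_minutes=1))  # 5
print(workers_needed(450, jobs_per_worker_per_min=100, target_minutes=3))  # 2
```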

This approach has several advantages:

1. Financial planning

I always know how many servers are running and how much they cost me at the end of the day. There is no billing per request, per worker minute, per node or per function second.

2. Easy scalability and flexibility

I can spin up more workers per task in minutes, even automatically. If a group of workers is too busy with a specific task, I can add more workers to the group and split the jobs between them. I can even scale up individual workers while the others keep running and doing their job. And I can remove individual workers if the current load can be handled by fewer workers.

3. Reduced complexity

I don't have to worry about different languages, frameworks or systems. I can manage all tasks in the same language, which is PHP/Laravel, and don't have to split separate services into different systems. This makes it easier to maintain and debug the code in the long run.

The final stack

So I decided to go entirely with Laravel and outsource critical parts to other frameworks only if needed. I want to develop the core system first and then design and build the frontend on top of it. Laravel also gives me many options for the frontend: I can build it directly with Livewire (maybe even version 3), build a separate frontend with Vue or Svelte, or connect those to Laravel with Inertia. Great prospects!

This way, I can manage all tasks in a single Laravel app and scale horizontally and vertically as needed, with the key advantage that I only have to maintain a single application. I can assign each task to a different worker group, and the workers automatically split the load thanks to Laravel Horizon, an epic package for managing large queue systems in Laravel.

It is also possible to deploy new workers on any server in any country to support as many check locations as possible. For example, one worker could run on Hetzner, another on DigitalOcean and another on Linode. They could run in Singapore, London and Toronto, and another one in your living room. You get the point.

In conclusion, having multiple dedicated IP addresses that never change, combined with the ability to scale horizontally and vertically, makes this a cost-effective and efficient approach for managing tasks.

That’s it for today. In the next part I will continue to document the journey of building sneeky.